21 Unicode – a brief introduction (advanced)

Unicode is a standard for representing and managing text in most of the world’s writing systems. Virtually all modern software that works with text, supports Unicode. The standard is maintained by the Unicode Consortium. A new version of the standard is published every year (with new emojis, etc.). Unicode version 1.0.0 was published in October 1991.

21.1 Code points vs. code units

Two concepts are crucial for understanding Unicode:

- Code points are numbers that represent the atomic parts of Unicode text. Most of them represent visible symbols but they can also have other meanings such as specifying an aspect of a symbol (the accent of a letter, the skin tone of an emoji, etc.).

- Code units are numbers that encode code points, to store or transmit Unicode text. One or more code units encode a single code point. Each code unit has the same size, which depends on the encoding format that is used. The most popular format, UTF-8, has 8-bit code units.

21.1.1 Code points

The first version of Unicode had 16-bit code points. Since then, the number of characters has grown considerably and the size of code points was extended to 21 bits. These 21 bits are partitioned in 17 planes, with 16 bits each:

-

Plane 0: Basic Multilingual Plane (BMP), 0x0000–0xFFFF

- Contains characters for almost all modern languages (Latin characters, Asian characters, etc.) and many symbols.

-

Plane 1: Supplementary Multilingual Plane (SMP), 0x10000–0x1FFFF

- Supports historic writing systems (e.g., Egyptian hieroglyphs and cuneiform) and additional modern writing systems.

- Supports emojis and many other symbols.

-

Plane 2: Supplementary Ideographic Plane (SIP), 0x20000–0x2FFFF

- Contains additional CJK (Chinese, Japanese, Korean) ideographs.

- Plane 3–13: Unassigned

-

Plane 14: Supplementary Special-Purpose Plane (SSP), 0xE0000–0xEFFFF

- Contains non-graphical characters such as tag characters and glyph variation selectors.

-

Plane 15–16: Supplementary Private Use Area (S PUA A/B), 0x0F0000–0x10FFFF

- Available for character assignment by parties outside the ISO and the Unicode Consortium. Not standardized.

Planes 1-16 are called supplementary planes or astral planes.

Let’s check the code points of a few characters:

> 'A'.codePointAt(0).toString(16)

'41'

> 'ü'.codePointAt(0).toString(16)

'fc'

> 'π'.codePointAt(0).toString(16)

'3c0'

> '🙂'.codePointAt(0).toString(16)

'1f642'

The hexadecimal numbers of the code points tell us that the first three characters reside in plane 0 (within 16 bits), while the emoji resides in plane 1.

21.1.2 Encoding Unicode code points: UTF-32, UTF-16, UTF-8

The main ways of encoding code points are three Unicode Transformation Formats (UTFs): UTF-32, UTF-16, UTF-8. The number at the end of each format indicates the size (in bits) of its code units.

21.1.2.1 UTF-32 (Unicode Transformation Format 32)

UTF-32 uses 32 bits to store code units, resulting in one code unit per code point. This format is the only one with fixed-length encoding; all others use a varying number of code units to encode a single code point.

21.1.2.2 UTF-16 (Unicode Transformation Format 16)

UTF-16 uses 16-bit code units. It encodes code points as follows:

-

The BMP (first 16 bits of Unicode) is stored in single code units.

-

Astral planes: The BMP comprises 0x10_000 code points. Given that Unicode has a total of 0x110_000 code points, we still need to encode the remaining 0x100_000 code points (20 bits). The BMP has two ranges of unassigned code points that provide the necessary storage:

- Most significant 10 bits (leading surrogate, high surrogate): 0xD800-0xDBFF

- Least significant 10 bits (trailing surrogate, low surrogate): 0xDC00-0xDFFF

As a consequence, each UTF-16 code unit is either:

- A BMP code point (a scalar)

- A leading surrogate

- A trailing surrogate

If a surrogate appears on its own, without its partner, it is called a lone surrogate.

This is how the bits of the code points are distributed among the surrogates:

0bhhhhhhhhhhllllllllll // code point - 0x100000b110110hhhhhhhhhh // 0xD800 + 0bhhhhhhhhhh0b110111llllllllll // 0xDC00 + 0bllllllllll

Each character of a JavaScript string is a UTF-16 code unit. As an example, consider code point 0x1F642 (🙂) that is represented by two UTF-16 code units – 0xD83D and 0xDE42:

> '🙂'.codePointAt(0).toString(16)

'1f642'

> '🙂'.length

2

> '🙂'.split('')

[ '\uD83D', '\uDE42' ]

Let’s derive the code units from the code point:

> (0x1F642 - 0x10000).toString(2).padStart(20, '0')

'00001111011001000010'

> (0xD800 + 0b0000111101).toString(16)

'd83d'

> (0xDC00 + 0b1001000010).toString(16)

'de42'

In contrast, code point 0x03C0 (π) is part of the BMP and therefore represented by a single UTF-16 code unit – 0x03C0:

> 'π'.length

1

21.1.2.3 UTF-8 (Unicode Transformation Format 8)

UTF-8 has 8-bit code units. It uses 1–4 code units to encode a code point:

| Code points | Code units |

|---|---|

| 0000–007F | 0bbbbbbb (7 bits) |

| 0080–07FF | 110bbbbb, 10bbbbbb (5+6 bits) |

| 0800–FFFF | 1110bbbb, 10bbbbbb, 10bbbbbb (4+6+6 bits) |

| 10000–1FFFFF | 11110bbb, 10bbbbbb, 10bbbbbb, 10bbbbbb (3+6+6+6 bits) |

Notes:

-

The bit prefix of each code unit tells us:

- Is it first in a series of code units? If yes, how many code units will follow?

- Is it second or later in a series of code units?

- The character mappings in the 0000–007F range are the same as ASCII, which leads to a degree of backward compatibility with older software.

Three examples:

| Character | Code point | Code units |

|---|---|---|

| A | 0x0041 | 01000001 |

| π | 0x03C0 | 11001111, 10000000 |

| 🙂 | 0x1F642 | 11110000, 10011111, 10011001, 10000010 |

21.2 Encodings used in web development: UTF-16 and UTF-8

The Unicode encoding formats that are used in web development are: UTF-16 and UTF-8.

21.2.1 Source code internally: UTF-16

The ECMAScript specification internally represents source code as UTF-16.

21.2.2 Strings: UTF-16

The characters in JavaScript strings are based on UTF-16 code units:

> const smiley = '🙂';

> smiley.length

2

> smiley === '\uD83D\uDE42' // code units

true

For more information on Unicode and strings, see “Atoms of text: code points, JavaScript characters, grapheme clusters” (§22.7).

21.2.3 Source code in files: UTF-8

HTML and JavaScript files are almost always encoded as UTF-8 now.

For example, this is how HTML files usually start now:

<!doctype html>

<html>

<head>

<meta charset="UTF-8">

···

21.3 Grapheme clusters – the real characters

The concept of a character becomes remarkably complex once we consider the various writing systems of the world. That’s why there are several different Unicode terms that all mean “character” in some way: code point, grapheme cluster, glyph, etc.

In Unicode, a code point is an atomic part of text stored in a computer.

However, a grapheme cluster corresponds most closely to a symbol displayed on screen or paper. It is defined as “a horizontally segmentable unit of text”. Therefore, official Unicode documents also call it a user-perceived character. One or more code points are needed to encode a grapheme cluster.

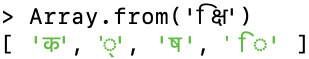

For example, the Devanagari kshi is encoded by 4 code points. We use Array.from() to split the string into an Array with code points:

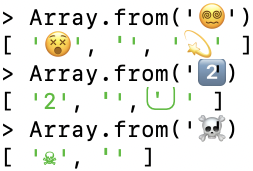

Many emojis are composed of multiple code points:

Flag emojis are grapheme clusters and composed of two code points – for example, the flag of Japan:

21.3.1 Grapheme clusters vs. glyphs

A symbol is an abstract concept and part of written language:

- It is represented in computer memory by a grapheme cluster – a sequence of one or more code points (numbers).

- It is drawn on screen via glyphs. A glyph is an image and usually stored in a font. More than one glyph may be used to draw a single symbol – for example, the symbol “é” may be drawn by combining the glyph “e” with the glyph “´”.

The distinction between a concept and its representation is subtle and can blur when talking about Unicode.

More information on grapheme clusters

For more information, see “Let’s Stop Ascribing Meaning to Code Points” by Manish Goregaokar.